
Goodbye 2013 and hello 2014, along with predictions past, present and future

First, for those who may have missed this, thanks to all who helped make 2013 a great year!

Looking back at 2013 I saw a continued trend of more vendors and their media public relations (PR) people reaching out to have their predictions placed in articles, posts, columns or trends perspectives pieces.

Hmm, maybe a new trend is predictions selfies? ;)

Not to worry, this is not a wrapper piece for a bunch of those pitched and placed predictions requests that I received in 2013 as those have been saved for a rainy or dull day when we need to have some fun ;) .

What about 2013 server storage I/O networking, cloud, virtual and physical?

2013 ended with some end-of-year buying sprees, including Avago acquiring storage I/O and networking vendor LSI for about $6.6B USD (e.g. SSD cards, RAID cards, cache cards, HBAs (Host Bus Adapters), chips and other items), along with Seagate buying Xyratex for about $374M USD (a Seagate supplier and customer partner).

Xyratex is known by some for making the storage enclosures that house hard disk drives (HDDs) and solid state device (SSD) drives that are used by many well-known, and some not so well-known, systems and solution vendors. Xyratex also has other pieces to its business, such as appliances that combine its storage enclosures for HDDs and SSDs with server boards, along with a software group focused on High Performance Compute (HPC) Lustre. There is another part of the Xyratex business that is not as well-known, which is the test equipment used by disk drive manufacturers such as Seagate as part of their manufacturing process. Thus the acquisition moves Seagate up market with more integrated solutions to offer to their (e.g. Seagate and Xyratex) joint customers, as well as streamlining their own supply chain and costs (not to mention selling equipment to the other remaining drive manufacturers, WD and Toshiba).


Other 2013 acquisitions included Whiptail by Cisco, Virident by WD (who also bought several other companies) and Softlayer by IBM, along with various mergers, company launches, company shutdowns (cloud storage Nirvanix and the SSD maker OCZ bankruptcy filing), and IPOs (some did well like Nimble, while Violin not so well), while earlier high-flying industry darlings such as FusionIO are now the high-flung darling targets of the shareholder stock lawsuit attorneys.

2013 also saw the end of SNW (Storage Networking World), jointly produced by SNIA and Computerworld Storage in the US, after more than a decade. Some perspectives from the last US SNW, held October 2013, can be found in the Fall 2013 StorageIO Update Newsletter here, granted those were written before the event was formally announced as being terminated.

Speaking of events, check out the November 2013 StorageIO Update Newsletter here for perspectives from attending the Amazon Web Services (AWS) re:Invent conference which joins VMworld, EMCworld and a bunch of other vendor world events.

Let's also not forget Dell buying itself in 2013.

Click on the following links to read more (and here) about my various 2013 industry trends perspectives and commentary in various venues, along with tips, articles, newsletters, events, podcasts, videos and other items.

What about 2014?

Perhaps 2014 will build on the 2013 momentum toward treating the annual rite of passage known as making meaningless future-year trends and predictions as passé?

Not that there is anything wrong with making predictions for the coming year, particularly if they actually have some relevance and practicality, not to mention a track record.

However, the past few years seem to have resulted in press releases along with product (or services) plugs being masked as predictions, or simply making the same predictions for the coming year that did not come to be for the earlier year (or the one before that, or before that, and so forth).

On the other hand, from an entertainment perspective, perhaps that’s where we will see annual predictions finally get classified and put into perspective as being just that.


Now for those who still cling to as well as look forward to annual predictions, ok, simple, we will continue in 2014 (and beyond) from where we left off in 2013 (and 2012 and earlier) meaning more (or continued):

- Software defined "x" (replace "x" with your favorite topic) industry adoption (discussion and buzz), yet continued questions and conversations around customer adoption and deployment.

- Cloud conversations shifted from "let's all go to the cloud" as the new shiny technology to questioning the security, privacy, stability, and vendor or service viability, not to mention other common sense concerns that should have been discussed or looked into earlier. I have also heard from people who say Amazon (as well as Verizon, Microsoft, Bluehost, Google, Nirvanix, Yahoo and the list goes on) outages are bad for the image of clouds as they shake people’s confidence. IMHO people’s confidence needs to be shaken into having some common sense around clouds, including: don’t be scared, be ready, do your homework and basic due diligence. This means conversations about cloud concerns set the stage for increased awareness in decision-making, usage, deployment and best practices (all of which are good things for continued cloud deployments). However, if some vendors or pundits feel that people having basic cloud concerns that can be addressed is not good for their products or services, I would like to talk with them, because they may be missing an opportunity to create long-term confidence with their customers or prospects.

- VDI as a technology being deployed continues to grow (e.g. customer adoption) while the industry adoption (buzz or what’s being talked about) has slowed a bit which makes sense as vendors jump from one bandwagon to the new software defined bandwagon.

- Continued awareness that modernizing data protection (including backup/restore, business continuance (BC), disaster recovery (DR), high availability, archiving and security) means more than simply swapping out old technology for new while still using it in old ways. After all, in the data center and information factory not everything is the same. Speaking of data protection, check out the series of technology neutral webcasts and video chats that started last fall as part of BackupU, brought to you by Dell. Even though Dell is the sponsor of the series (that’s a disclosure btw ;) ), the focus of the sessions is on how to use different tools, technologies and techniques in new ways, as well as having the right tools for different tasks. Check out the information as well as register to get a free Data Protection chapter download from my book Cloud and Virtual Data Storage Networking (CRC Press) at the BackupU site, as well as attend upcoming events.

- The nand flash solid state devices (SSD) cash-dash (and shakeout) continues with some acquisitions and IPOs, as well as the disappearance of some weaker vendors and the appearance of some new ones. SSD is showing that it is real in several ways (despite myths, FUD and hype, some of which gets clarified here), ranging from some past IPO vendors (e.g. FusionIO) seeing the exit of their CEO and founders while their stock plummets and shareholder investor lawsuits arrive, to Violin’s ho-hum IPO. What this means is that the market is real and has a very bright future; however, there is also a correction occurring, showing that reality may be settling in for the long run (e.g. next couple of decades) vs. SSD being in the realm of unicorns.

- Internet of Things (IoT) and Internet of Devices (IoD) may give some relief for Big Data, BYOD, VDI, Software Defined and Cloud among others that need a rest after their busy usage the past few years. On the other hand, expect enhanced use of earlier buzzwords combined with IoT and IoD. Of course that also means plenty of questions around what is and is not IoD along with IoT, and whether they are actually relevant to what you are doing.

- Also in 2014 some will discover storage and related data infrastructure topics, or some new product / service, and thus have a revolutionary experience that storage is now exciting, while others will have a déjà vu moment that it has been exciting for the past several years if not decades.

- More big data buzz as well as realization by some that a pragmatic approach opens up a bigger broader market, not to mention customers more likely to realize they have more in common with big data than it simply being something new forcing them to move cautiously.

- To say that OpenStack and related technologies will continue to gain both industry and customer adoption (and deployment) status building off of 2013 in 2014 would be an understatement, not to mention too easy to say, or leave out.

- While SSDs continue to gain in deployment (after all, the question is not if, rather when, where, with what and how much nand flash SSD is in your future), HDDs continue to evolve for physical, virtual and cloud environments. This also includes Seagate announcing a new (Kinetic) Ethernet attached HDD (note that this is not a NAS or iSCSI device) that uses a new key value object storage API for storing content data (more on this in 2014).

- This also means realizing that large amounts of little data can result in backlogs of lots of big data, and that big data is growing into very fast big data, not to mention realization by some that HDFS is just another distributed file system that happens to work with Hadoop.

- SOHOs and the lower end of SMB begin to get more respect (and not just during the week of the Consumer Electronics Show – CES).

- Realization that there is a difference between Industry Adoption and Customer Deployment, not to mention industry buzz and traction vs. customer adoption.


What about beyond 2014?

That’s easy, many of the predictions and prophecies that you hear about for the coming year have also been pitched in prior years, so it only makes sense that some of those will be part of the future.

- If you have seen or experienced something you are more likely to have déjà vu.

- OTOH if you have not seen or experienced something you are more likely to have a new and revolutionary moment!

- Start using new (and old) things in new ways vs. simply using new things in old ways.

- The barrier to technology convergence, not to mention new technology adoption, is often people or their organizations.

- Convergence is still around, and cloud conversations around concerns get addressed, leading to continued confidence for some.

- Realization that data infrastructure spans servers, storage I/O networking, cloud, virtual, physical, hardware, software and services.

- That you cannot have software defined without hardware, and hardware defined requires software.

- And it is time for me to get a new book project (or two) completed in addition to helping others with what they are working on, more on this in the months to come…

Here’s my point

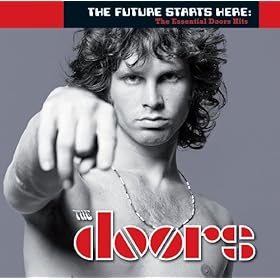

The late Jim Morrison of the Doors said "There are things known and things unknown and in between are the doors."

Above image and link via Amazon.com

Hence there is what we know about 2013 or will learn about the past in the future, then there is what will be in 2014 as well as beyond, so let's step through some doors and see what will be. This means learning and leveraging lessons from the past to avoid making the same or similar mistakes in the future, while looking forward without a death grip clinging to the past.

Needless to say there will be more to review, preview and discuss throughout the coming year and beyond as we go from what is unknown through doors and learn about the known.

Thanks to all who made 2013 a great year, best wishes to all, look forward to seeing and hearing from you in 2014!

Ok, nuff said (for now)

Cheers

Gs

Greg Schulz – Author Cloud and Virtual Data Storage Networking (CRC Press), The Green and Virtual Data Center (CRC Press) and Resilient Storage Networks (Elsevier)

twitter @storageio

All Comments, (C) and (TM) belong to their owners/posters, Other content (C) Copyright 2006-2026 Server StorageIO and UnlimitedIO LLC All Rights Reserved