Cloud conversations: Has Nirvanix shutdown caused cloud confidence concerns?

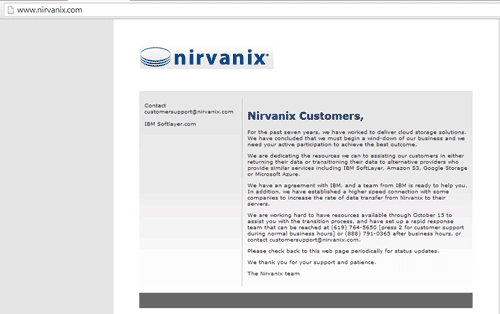

Recently, the seven-plus-year-old cloud storage startup Nirvanix announced that it was finally shutting down and that customers should move their data.

Nirvanix has also posted an announcement that they have established an agreement with IBM SoftLayer (read about that acquisition here) to help customers migrate to those services, as well as to those of Amazon Web Services (AWS) (read more about AWS in this primer here), Google and Microsoft Azure.

Cloud customer concerns?

With Nirvanix shutting down, there have been plenty of articles, blog posts, tweets and other conversations asking if clouds are safe.

Btw, here is a link to my ongoing poll where you can cast your vote on what you think about clouds.

IMHO clouds can be safe if used in safe ways, which includes knowing and addressing your concerns, not to mention following best practices, some of which pre-date the cloud era by a few decades.

Nirvanix Storm Clouds

More on this in a moment; however, let's touch base on Nirvanix and why I said they were finally shutting down.

The reason I say finally shutting down is that there were plenty of early warning signs and storm clouds circling Nirvanix for a few years now.

What I mean by this is that in their seven plus years of being in business, there have been more than a few CEO changes, something that is not unheard of.

Likewise, there have been some changes to their business model, ranging from selling their software as a service to a solution to hosting, among others. Again, smart startups and established organizations will adapt over time.

Nirvanix also invested heavily in marketing, public relations (PR) and analyst relations (AR) to generate buzz along with gaining endorsements as do most startups to get recognition, followings and investors if not real customers on board.

In the case of Nirvanix, the indicator signs mentioned above also included what seemed like a semi-annual if not annual changing of CEOs, marketing and others tying into business model adjustments.

It was only a year or so ago that, if you gauged a company's health by its PR and AR news, activity and endorsements, you would have believed Nirvanix was about to crush Amazon, Rackspace or many others; perhaps some actually did believe that. This was followed shortly thereafter by the abrupt departure of their then CEO and marketing team. Thus, just as fast as Nirvanix seemed to be the phoenix rising to stardom, their aura started to dim again, which could or should have been a warning sign.

This is not to single out Nirvanix; however, given their penchant for marketing and now what appears to some as a sudden collapse or shutdown, they have also become a lightning rod of sorts for clouds in general. Given all the hype and FUD around clouds, when something does happen the detractors will be quick to jump or pile on to say things like "See, I told you, clouds are bad".

Meanwhile, the cloud cheerleaders may go into denial, saying there are no problems or issues with clouds, or they may go back into a committee meeting to create a new stack, standard, API set marketing consortium alliance. ;) On the other hand, there are valid concerns with any technology, including clouds: good implementations can be used the wrong way, and questionable implementations and selections can be used in what seem like good ways that then go bad.

This is not to say that clouds in general, whether as a service, solution or product on a public, private or hybrid basis, are any riskier than traditional hardware, software and services. Instead, this should be a wake-up call for people and organizations to review clouds, citing their concerns along with revisiting what to do or what can be done about them.

Clouds: Being prepared

Ben Woo of Neuralytix posted this question to one of the LinkedIn groups, "Collateral Considerations If You Were/Are A Nirvanix Customer", to which I posted some tips and recommendations, including:

1) If you have another copy of your data somewhere else (which you should, btw), how will your data at Nirvanix be securely erased, and the storage it resides on be safely (and securely) decommissioned?
2) If you do have another copy of your data elsewhere, how current is it, and can you bring it up to date from various sources (including updates from Nirvanix while they stay online)?
3) Where will you move your data to short or near term, as well as long term?
4) What changes will you make to your procurement process for cloud services in the future to protect against situations like this happening to you?
5) As part of your plan for putting data into the cloud, refine your strategy for getting it out, moving it to another service or place, as well as having an alternate copy somewhere.

Fwiw, for any data I put into a cloud service there is also another copy somewhere else. Even though there is a cost, there is a benefit: the ability to decide which copy to use if needed, as well as having a backup/spare copy.
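Point 2 in the list above asks how current your other copy is. One way to answer that, as a rough sketch and not something from the original discussion, is to compare checksums of the two copies. The example below assumes both copies are reachable as local directory trees (for instance a mounted or downloaded export); the function names `checksum_manifest` and `compare_copies` are made up for illustration.

```python
import hashlib
from pathlib import Path


def checksum_manifest(root):
    """Map each file's path (relative to root) to its SHA-256 digest."""
    root = Path(root)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Reads the whole file at once; fine for a sketch,
            # but large files would be better hashed in chunks.
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def compare_copies(primary, alternate):
    """Report files that are missing from, or differ in, the alternate copy."""
    missing = sorted(name for name in primary if name not in alternate)
    stale = sorted(
        name for name in primary
        if name in alternate and primary[name] != alternate[name]
    )
    return {"missing": missing, "stale": stale}
```

Calling `compare_copies(checksum_manifest(cloud_dir), checksum_manifest(local_dir))` lists what the local copy lacks or holds in a different version, which is the work left to do while the service is still online.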

Cloud Concerns and Confidence

As part of cloud procurement, whether of services or products, the same proper due diligence should occur as if you were buying traditional hardware, software, networking or services. That includes checking out not only the technology, but also the company's financial and business records and customer references (both good and not so good or bad ones) to gain confidence. Part of gaining that confidence also involves addressing ahead of time how you will get your data out of or back from that service if needed.

Ask yourself: if your data is very important, are you going to keep it in just one place? For example, I have data backed up as well as archived to cloud providers; however, I also have local copies either on-site or off.

Likewise, there is data I have locally that is also kept at alternate locations, including the cloud. Sure, that is costly; however, by not treating all of my data and applications the same, I’m able to balance those costs out, plus use the cost advantages of different services as well as on-site storage to be effective. I am not spending any less on data protection, and in fact I’m actually spending a bit more; however, I also have more copies and versions of important data, in multiple locations. Data that is not changing often does not get protected as often; however, there are multiple copies to meet different needs or threat risks.
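To make the idea of not treating all data the same a bit more concrete, here is a minimal sketch of a check that flags data sets with fewer copies than their importance calls for. The tiers, the minimum counts in `MIN_LOCATIONS` and the names `Dataset` and `under_protected` are all illustrative assumptions, not a recommendation of specific numbers.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    importance: str  # hypothetical tiers: "high", "medium", "low"
    locations: set = field(default_factory=set)  # e.g. {"on-site", "cloud-a"}


# Assumed policy: more important data lives in more distinct places.
MIN_LOCATIONS = {"high": 3, "medium": 2, "low": 1}


def under_protected(datasets, policy=MIN_LOCATIONS):
    """Return names of data sets kept in fewer locations than the policy asks for."""
    return [d.name for d in datasets if len(d.locations) < policy[d.importance]]


inventory = [
    Dataset("customer-db", "high", {"on-site", "cloud-a"}),
    Dataset("old-archives", "low", {"cloud-a"}),
    Dataset("project-files", "medium", {"on-site", "off-site"}),
]
print(under_protected(inventory))  # ['customer-db']
```

Here the high-importance data set is flagged because it lives in only two places, while the rarely changing archive passes with a single copy under this assumed policy.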

Don’t be scared of clouds, be prepared

While some of the other smaller cloud storage vendors will see some new customers, I suspect that near to mid-term, it will be the larger, more established and well-funded providers that gain the most from this current situation. Granted, some customers are looking for alternatives to the mega cloud providers such as Amazon, Google, HP, IBM, Microsoft and Rackspace among others; however, there is a long list of others, some of which are not as well known as they should be, such as CenturyLink/Savvis, Verizon/Terremark, Sungard, Dimension Data, Peak, Bluehost, Carbonite, Mozy (owned by EMC), Xerox ACS and EVault (owned by Seagate), not to mention many more.

Something to be aware of as part of doing your due diligence is determining who or what actually powers a particular cloud service. The larger providers such as Rackspace, Amazon, Microsoft, HP among others have their own infrastructure while some of the smaller service providers may in fact use one of the larger (or even smaller) providers as their real back-end. Hence understanding who is behind a particular cloud service is important to help decide the viability and stability of who it is you are subscribed to or working with.

Something that I have said for the past couple of years, and a theme of my book Cloud and Virtual Data Storage Networking (CRC Taylor & Francis), is: do not be scared of clouds; however, be ready and do your homework.

This also means having cloud concerns is a good thing. Again, don’t be scared; however, find out what those concerns are and whether they are major or minor. From that list you can start to decide how or if they can be worked around, as well as be prepared ahead of time should you either need all of your cloud data back quickly, or should that service become unavailable.

Also, when it comes to clouds, look beyond lowest cost or free; likewise, if something sounds too good to be true, perhaps it is. Instead, look for value, or how much you get for what you spend, including confidence in the service, service level agreements (SLA), security, and other items.

Keep in mind, only you can prevent data loss, either on-site or in the cloud; granted, it is a shared responsibility (with a poll).

Additional related cloud conversation items:

Cloud conversations: AWS EBS Optimized Instances

Poll: What Do You Think of IT Clouds?

Cloud conversations: Gaining cloud confidence from insights into AWS outages

Cloud conversations: confidence, certainty and confidentiality

Cloud conversation, Thanks Gartner for saying what has been said

Cloud conversations: AWS EBS, Glacier and S3 overview (Part III)

Cloud conversations: Gaining cloud confidence from insights into AWS outages (Part II)

Don’t Let Clouds Scare You – Be Prepared

Everything Is Not Equal in the Datacenter, Part 3

Amazon cloud storage options enhanced with Glacier

What do VARs and Clouds as well as MSPs have in common?

How many degrees separate you and your information?

Ok, nuff said.

Cheers

Gs

Greg Schulz – Author Cloud and Virtual Data Storage Networking (CRC Press), The Green and Virtual Data Center (CRC Press) and Resilient Storage Networks (Elsevier)

All Comments, (C) and (TM) belong to their owners/posters, Other content (C) Copyright 2006-2026 Server StorageIO and UnlimitedIO LLC All Rights Reserved
