Use NVMe U.2 SFF 8639 disk drive form factor SSD in PCIe slot

Need to install or use an Intel Optane NVMe 900P or other Nonvolatile Memory Express (NVMe) based U.2 SFF 8639 disk drive form factor Solid State Device (SSD) in a PCIe slot?

For example, I needed to connect an Intel Optane NVMe 900P U.2 SFF 8639 drive form factor SSD into one of my servers using an available PCIe slot.

The solution I used was a carrier adapter card such as those from Ableconn (PEXU2-132 NVMe 2.5-inch U.2 [SFF-8639]), available via Amazon.com among other global venues.

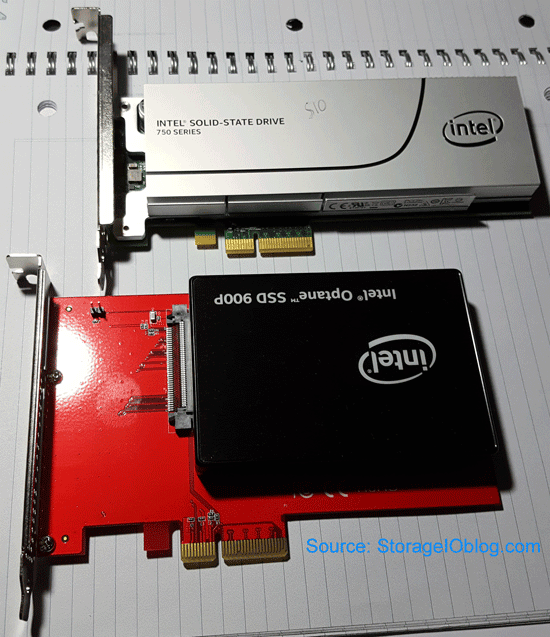

Top Intel 750 NVMe PCIe AiC SSD, bottom Intel Optane NVMe 900P U.2 SSD with Ableconn carrier

The above image shows, on top, an Intel 750 NVMe PCIe Add in Card (AiC) SSD and, on the bottom, an Intel Optane NVMe 900P 280GB U.2 (SFF 8639) drive form factor SSD mounted on an Ableconn carrier adapter.

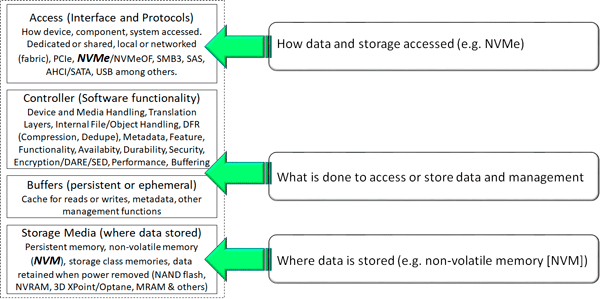

NVMe Tradecraft Refresher

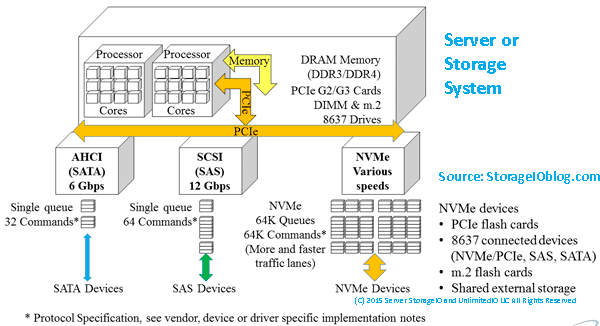

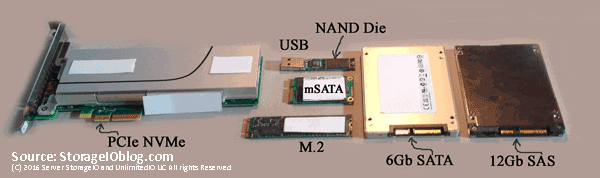

NVMe is the protocol that is implemented with different topologies including local via PCIe using U.2 aka SFF-8639 (aka disk drive form factor), M.2 aka Next Generation Form Factor (NGFF) also known as "gum stick", along with PCIe Add in Card (AiC). NVMe accessed devices can be installed in laptops, ultrabooks, workstations, servers and storage systems using the various form factors. U.2 drives are also referred to by some as PCIe drives in that the NVMe command set protocol is implemented using a PCIe x4 physical connection to the devices. If you want to skip the NVMe primer refresher material, jump ahead to learn more about U.2 8639 devices.

Various SSD device form factors and interfaces

In addition to form factor, NVMe devices can be direct attached and dedicated, rack and shared, as well as accessed via networks also known as fabrics such as NVMe over Fabrics.

The many facets of NVMe as a front-end, back-end, direct attach and fabric

Context is important with NVMe in that fabric can mean NVMe over Fibre Channel (FC-NVMe) where the NVMe command set protocol is used in place of SCSI Fibre Channel Protocol (e.g. SCSI_FCP) aka FCP or what many simply know and refer to as Fibre Channel. NVMe over Fabric can also mean NVMe command set implemented over an RDMA over Converged Ethernet (RoCE) based network.

Another point of context is not to confuse Nonvolatile Memory (NVM), which is the storage or memory media, with NVMe, which is the interface for accessing storage (e.g. similar to SAS, SATA and others). As a refresher, NVM or the media are the various persistent memories (PM) including NVRAM, NAND Flash, 3D XPoint along with other storage class memories (SCM) used in SSDs (in various packaging).

Learn more about 3D XPoint with the following resources:

Learn more about (or refresh) your NVMe server storage I/O knowledge, experience and tradecraft skill set with this post here. View this piece here looking at NVM vs. NVMe and how one is the media where data is stored (e.g. NVM), while the other is an access protocol (e.g. NVMe). Also visit www.thenvmeplace.com to view additional NVMe tips, tools, technologies, and related resources.

NVMe U.2 SFF-8639 aka 8639 SSD

At a quick glance, an NVMe U.2 SFF-8639 SSD may look like a SAS small form factor (SFF) 2.5" HDD or SSD. Also, keep in mind that HDDs and SSDs with a SAS interface have a small tab to prevent inserting them into a SATA port. As a reminder, SATA devices can plug into SAS ports, however not the other way around, which is what the key tab does (it prevents accidental insertion of SAS into SATA). Looking at the left-hand side of the following image you will see an NVMe SFF 8639 aka U.2 backplane connector, which looks similar to a SAS port.

Note that depending on how it is implemented, including its internal controller, flash translation layer (FTL), firmware and other considerations, an NVMe U.2 or 8639 x4 SSD should have similar performance to a comparable NVMe x4 PCIe AiC (e.g. card) device. By comparable device, I mean the same type of NVM media (e.g. flash or 3D XPoint), FTL and controller. Likewise, a PCIe x8 device should generally be faster than an x4, however more PCIe lanes do not automatically mean more performance; it’s what’s inside and how those lanes are actually used that matters.

NVMe U.2 SFF 8639 Drive (Software Defined Data Infrastructure Essentials CRC Press)

With U.2 devices the key tab that prevents SAS drives from inserting into a SATA port is where four pins that support PCIe x4 are located. What this all means is that a U.2 8639 port or socket can accept an NVMe, SAS or SATA device depending on how the port is configured. Note that the U.2 8639 port is either connected to a SAS controller for SAS and SATA devices or a PCIe port, riser or adapter.

On the left of the above figure is a view towards the backplane of a storage enclosure in a server that supports SAS, SATA, and NVMe (e.g. 8639). On the right of the above figure is the connector end of an 8639 NVM SSD showing additional pin connectors compared to a SAS or SATA device. Those extra pins give PCIe x4 connectivity to the NVMe devices. The 8639 drive connectors enable a device such as an NVM or NAND flash SSD to share a common physical storage enclosure with SAS and SATA devices, including optional dual-pathing.

More PCIe lanes may not mean faster performance. Verify whether those lanes (e.g. x4, x8, x16) are present only mechanically (e.g. physically) or also electrically (i.e. they are usable), and whether they are actually being used. Also, note that some PCIe storage devices or adapters might be, for example, an x8 that supports two channels or devices, each at x4. Likewise, some devices might be x16 yet only support four x4 devices.
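As a rough back-of-the-envelope illustration of the lane discussion above, here is a small Python sketch that estimates theoretical one-direction bandwidth. It assumes PCIe Gen3 signaling (8 GT/s per lane with 128b/130b encoding), which is my assumption for illustration rather than something measured for this post, and the function and parameter names are hypothetical. Real-world throughput will be lower because of protocol overhead and whatever the controller, FTL and media can actually deliver.

```python
# Rough PCIe bandwidth estimate; results are theoretical maxima per direction.
# Assumption: Gen3 = 8 GT/s per lane with 128b/130b encoding (illustrative names).

GEN3_GT_PER_SEC = 8.0        # giga-transfers per second, per lane
GEN3_ENCODING = 128 / 130    # usable fraction after 128b/130b line encoding


def usable_lanes(mechanical: int, electrical: int, device: int) -> int:
    """Lanes that can be used: the narrowest of slot size, wired lanes, device width."""
    return min(mechanical, electrical, device)


def gen3_bandwidth_gbytes(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a given Gen3 lane count."""
    return lanes * GEN3_GT_PER_SEC * GEN3_ENCODING / 8  # 8 bits per byte


if __name__ == "__main__":
    # An x16 mechanical slot wired x8 electrically, holding an x4 U.2 carrier.
    lanes = usable_lanes(mechanical=16, electrical=8, device=4)
    print(f"usable lanes: x{lanes}")
    print(f"approx. bandwidth: {gen3_bandwidth_gbytes(lanes):.2f} GB/s")
    # An x8 adapter split into two x4 channels: each device still gets x4.
    print(f"per device on 2 x4: {gen3_bandwidth_gbytes(8 // 2):.2f} GB/s")
```

Under these assumptions an x4 device tops out at a little under 4 GB/s in each direction no matter how large the slot is, which is why the electrical width and the device width matter more than the mechanical slot size.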

NVMe U.2 SFF 8639 PCIe Drive SSD FAQ

Some common questions pertaining to NVMe U.2 aka SFF 8639 interface and form factor based SSDs include:

Why use U.2 type devices?

Compatibility with what’s available for server storage I/O slots in a server, appliance or storage enclosure. Ability to mix and match SAS, SATA and NVMe, with some caveats, in the same enclosure. Support for higher density storage configurations, maximizing available PCIe slots and enclosure density.

Is PCIe x4 with NVMe U.2 devices fast enough?

While not as fast as a PCIe AiC that fully supports x8 or x16 or higher, an x4 U.2 NVMe accessed SSD should be plenty fast for many applications. If you need more performance, then go with a faster AiC card.

Why not go with all PCIe AiC?

If you need the speed and simplicity, and have available PCIe card slots, then put as many of those in your systems or appliances as possible. On the other hand, some servers or appliances are PCIe slot constrained, so U.2 devices can be used to increase the number of devices attached to a PCIe backplane while also supporting SAS and SATA based SSDs or HDDs.

Why not use M.2 devices?

If your system or appliance supports NVMe M.2, those are good options. Some systems even support a combination of M.2 for local boot, staging, logs, work and other storage space, while PCIe AiC are used for performance along with U.2 devices.

Why not use NVMeoF?

Good question, why not? If your shared storage system supports NVMeoF or FC-NVMe, go ahead and use that, however you might also need some local NVMe devices. Likewise, if yours is a software-defined storage platform that needs local storage, then NVMe U.2, M.2 and AiC or custom cards are an option. On the other hand, a shared fabric NVMe based solution may support a mixed pool of SAS, SATA along with NVMe U.2, M.2, AiC or custom cards as its back-end storage resources.

When not to use U.2?

If your system, appliance or enclosure does not support U.2 and you do not have a need for it. Or, if you need more performance such as from an x8 or x16 based AiC, or you need shared storage. Granted a shared storage system may have U.2 based SSD drives as back-end storage among other options.

How does the U.2 backplane connector attach to PCIe?

Via the enclosure's backplane, there is either a direct hardwired connection to the PCIe backplane, or a connector cable to a riser card or similar mechanism.

Does NVMe replace SAS, SATA or Fibre Channel as an interface?

The NVMe command set is an alternative to the traditional SCSI command set used in SAS and Fibre Channel. That means it can replace them or co-exist with them, depending on your needs and preferences for accessing various storage devices.

Who supports U.2 devices?

Dell has supported U.2 aka PCIe drives in some of their servers for many years, as have Intel and many others. Likewise, U.2 8639 SSD drives including 3D XPoint and NAND flash-based are available from Intel among others.

Can you have AiC, U.2 and M.2 devices in the same system?

If your server, appliance or storage system supports them, then yes. Likewise, there are M.2 to PCIe AiC, M.2 to SATA along with other adapters available for your servers, workstations or software-defined storage system platform.

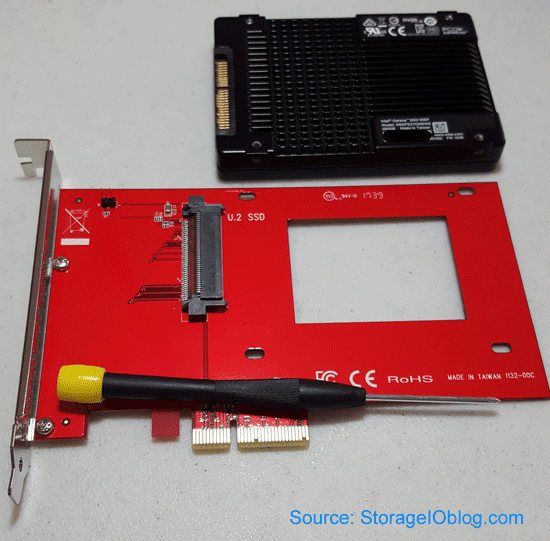

NVMe U.2 carrier to PCIe adapter

The following images show examples of mounting an Intel Optane NVMe 900P U.2 8639 SSD on an Ableconn PCIe AiC carrier. Once the U.2 SSD is mounted, the Ableconn adapter inserts into an available PCIe slot similar to other AiC devices. From a server or storage appliance's software perspective, the Ableconn is a pass-through device, so your normal device drivers are used. For example, VMware vSphere ESXi 6.5 recognizes the Intel Optane device, as do Windows and other operating systems.
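Because the carrier is a pass-through, the operating system should simply see an NVMe controller. As a hedged illustration, the sketch below lists what a Linux host reports under `/sys/class/nvme`. Linux is my assumption here (this post shows VMware ESXi), and the function names and returned field names are hypothetical; the sysfs attributes read (`model`, `serial`, `firmware_rev`, `transport`) are the ones the Linux NVMe driver exposes per controller.

```python
from pathlib import Path
from typing import Dict, List


def read_attr(ctrl_dir: Path, name: str) -> str:
    """Return a sysfs attribute as stripped text, or '' if missing or unreadable."""
    try:
        return (ctrl_dir / name).read_text().strip()
    except OSError:
        return ""


def list_nvme_controllers(sysfs_root: str = "/sys/class/nvme") -> List[Dict[str, str]]:
    """Collect basic identity fields for each NVMe controller the kernel has bound."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []  # no NVMe controllers bound, or not a Linux host
    controllers = []
    for ctrl_dir in sorted(root.iterdir()):
        controllers.append({
            "name": ctrl_dir.name,                          # e.g. nvme0
            "model": read_attr(ctrl_dir, "model"),
            "serial": read_attr(ctrl_dir, "serial"),
            "firmware": read_attr(ctrl_dir, "firmware_rev"),
            "transport": read_attr(ctrl_dir, "transport"),  # "pcie" for local devices
        })
    return controllers


if __name__ == "__main__":
    found = list_nvme_controllers()
    if not found:
        print("No NVMe controllers found")
    for ctrl in found:
        print(f'{ctrl["name"]}: {ctrl["model"]} '
              f'(fw {ctrl["firmware"]}, transport {ctrl["transport"]})')
```

If a U.2 SSD on a carrier does not show up in a listing like this, the usual suspects are the slot itself, BIOS/UEFI settings or a missing driver rather than the carrier, since the carrier adds no controller of its own.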

Intel Optane NVMe 900P U.2 SSD and Ableconn PCIe AiC carrier

The above image shows the Ableconn adapter carrier card along with NVMe U.2 8639 pins on the Intel Optane NVMe 900P.

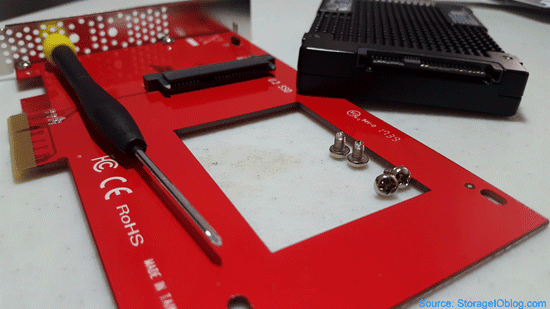

Views of Intel Optane NVMe 900P U.2 8639 and Ableconn carrier connectors

The above image shows an edge view of the NVMe U.2 SFF 8639 connector on the Intel Optane NVMe 900P SSD along with the matching connector on the Ableconn adapter carrier. The following images show an Intel Optane NVMe 900P SSD installed in a PCIe AiC slot using an Ableconn carrier, along with how VMware vSphere ESXi 6.5 sees the device using plug and play NVMe device drivers.

Intel Optane NVMe 900P U.2 SSD installed in PCIe AiC Slot

How VMware vSphere ESXi 6.5 sees NVMe U.2 device

Intel NVMe Optane NVMe 3D XPoint based and other SSDs

Here are some Amazon.com links to various Intel Optane NVMe 3D XPoint based SSDs in different packaging form factors:

Here are some Amazon.com links to various Intel and other vendor NAND flash based NVMe accessed SSDs including U.2, M.2 and AiC form factors:

Note in addition to carriers to adapt U.2 8639 devices to PCIe AiC form factor and interfaces, there are also M.2 NGFF to PCIe AiC among others. An example is the Ableconn M.2 NGFF PCIe SSD to PCI Express 3.0 x4 Host Adapter Card.

In addition to Amazon.com, Newegg.com, eBay and many other venues carry NVMe related technologies.

The Intel Optane NVMe 900P devices are newer, however the Intel 750 Series along with other Intel NAND Flash based SSDs are still good price performers and provide value. I have accumulated several Intel 750 NVMe devices over the past few years as they are great price performers. Check out this related post Get in the NVMe SSD game (if you are not already).

Where To Learn More

View additional NVMe, SSD, NVM, SCM, Data Infrastructure and related topics via the following links.

- NVMe overview and primer – Part I

- Part II – NVMe overview and primer (Different Configurations)

- Part III – NVMe overview and primer (Need for Performance Speed)

- Part IV – NVMe overview and primer (Where and How to use NVMe)

- Part V – NVMe overview and primer (Where to learn more, what this all means)

- New family of Intel Xeon Scalable Processors enable software defined data infrastructures and SDDC

- PCIe Server I/O Fundamentals

- Get in the NVMe SSD game (if you are not already) with Intel 750 among others

- If NVMe is the answer, what are the questions?

- NVMe Wont Replace Flash By Itself

- Via Computerweekly – NVMe discussion: PCIe card vs U.2 and M.2

- Intel and Micron unveil new 3D XPoint Non Volatile Memory (NVM) for servers and storage

- Part II – Intel and Micron new 3D XPoint server and storage NVM

- Part III – 3D XPoint new server storage memory from Intel and Micron

- Server storage I/O benchmark tools, workload scripts and examples (Part I) and (Part II)

- Data Infrastructure Overview, Its What’s Inside of Data Centers

- Data Infrastructure Resource Links cloud data protection tradecraft trends

- Software Defined, Converged Infrastructure (CI), Hyper-Converged Infrastructure (HCI) resources

- The SSD Place (SSD, NVM, PM, SCM, Flash, NVMe, 3D XPoint, MRAM and related topics)

- The NVMe Place (NVMe related topics, trends, tools, technologies, tip resources)

Additional learning experiences along with common questions (and answers), as well as tips, can be found in the Software Defined Data Infrastructure Essentials book.

What This All Means

NVMe accessed storage is in your future, however there are various questions to address, including exploring your options for type of devices, form factors and configurations among other topics. Some NVMe accessed storage is direct attached and dedicated in laptops, ultrabooks, workstations and servers including PCIe AiC, M.2 and U.2 SSDs, while others are shared networked aka fabric based. NVMe over fabric (e.g. NVMeoF) includes RDMA over converged Ethernet (RoCE) as well as NVMe over Fibre Channel (e.g. FC-NVMe). Pooled shared storage systems and appliances that are accessed via a networked NVMe fabric can also include internal NVMe attached devices (e.g. as part of back-end storage) as well as other SSDs (e.g. SAS, SATA).

General wrap-up (for now) NVMe U.2 8639 and related tips include:

- Verify the performance of the device vs. how many PCIe lanes exist

- Update any applicable BIOS/UEFI, device drivers and other software

- Check the form factor and interface needed (e.g. U.2, M.2 / NGFF, AiC) for a given scenario

- Look carefully at the NVMe devices being ordered for proper form factor and interface

- With M.2 verify that it is an NVMe enabled device vs. SATA
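For the first tip in the list above, one way to check how many lanes a device actually negotiated is to compare its current and maximum PCIe link settings. The following is a minimal sketch assuming a Linux host, where the PCI device directory in sysfs exposes `current_link_width`, `max_link_width`, `current_link_speed` and `max_link_speed`. The function names and returned field names are hypothetical, and the example path assumes the controller appears as `nvme0`.

```python
from pathlib import Path
from typing import Optional


def _read(path: Path) -> str:
    """Return file contents stripped of whitespace, or '' if unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return ""


def _speed_gts(text: str) -> Optional[float]:
    """Parse the leading number from strings such as '8.0 GT/s PCIe'."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return None


def link_report(pci_dev_dir: str) -> dict:
    """Compare negotiated PCIe link width and speed against the device maximum."""
    dev = Path(pci_dev_dir)
    cur_w = _read(dev / "current_link_width")
    max_w = _read(dev / "max_link_width")
    cur_s = _speed_gts(_read(dev / "current_link_speed"))
    max_s = _speed_gts(_read(dev / "max_link_speed"))
    narrower = cur_w.isdigit() and max_w.isdigit() and int(cur_w) < int(max_w)
    slower = cur_s is not None and max_s is not None and cur_s < max_s
    return {
        "current_width": cur_w, "max_width": max_w,
        "current_speed_gts": cur_s, "max_speed_gts": max_s,
        "running_below_max": narrower or slower,
    }


if __name__ == "__main__":
    # On Linux, /sys/class/nvme/nvme0/device links to the controller's PCI device.
    print(link_report("/sys/class/nvme/nvme0/device"))
```

A device reporting fewer lanes or a lower speed than its maximum is often sitting in a slot that is mechanically larger than it is electrically wired, which is worth ruling out before blaming the SSD.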

Learn more about NVMe at www.thenvmeplace.com including how to use Intel Optane NVMe 900P U.2 SFF 8639 disk drive form factor SSDs in PCIe slots as well as for fabric among other scenarios.

Ok, nuff said, for now.

Gs

Greg Schulz – Microsoft MVP Cloud and Data Center Management, VMware vExpert 2010-2017 (vSAN and vCloud). Author of Software Defined Data Infrastructure Essentials (CRC Press), as well as Cloud and Virtual Data Storage Networking (CRC Press), The Green and Virtual Data Center (CRC Press), Resilient Storage Networks (Elsevier) and twitter @storageio. Courteous comments are welcome for consideration. First published on https://storageioblog.com any reproduction in whole, in part, with changes to content, without source attribution under title or without permission is forbidden.

All Comments, (C) and (TM) belong to their owners/posters, Other content (C) Copyright 2006-2026 Server StorageIO and UnlimitedIO. All Rights Reserved. StorageIO is a registered Trade Mark (TM) of Server StorageIO.
