This is the second of a two-part series on EMC VFCache; you can read the first part here.

In this part of the series, let's look at some common questions along with comments and perspectives.

Common questions, answers, comments and perspectives:

Why would EMC not just go into the same market space and mode as FusionIO, a model that many other vendors seem eager to follow? IMHO many vendors are following or chasing FusionIO, thus most are selling in the same way, perhaps to the same customers. Some of those vendors could very easily, if they have not already, make a quick change to their playbook, adding some new moves to reach a broader audience.

Another smart move here is that by taking a companion or complementary approach, EMC can continue selling existing storage systems to customers and let them keep those investments, while also supporting competitors' products. In addition, for those customers who are slow to adopt SSD based techniques, this is a relatively easy and low risk way to gain confidence. Granted, the disk drive was declared dead several years (and yes, also several decades) ago, however it is and will stay alive for many years due to SSD helping to close the storage IO performance gap.

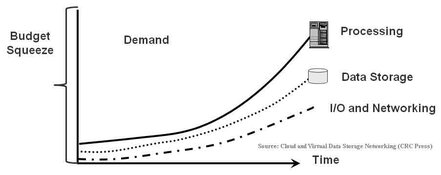

Data center and storage IO performance capacity gap (Courtesy of Cloud and Virtual Data Storage Networking (CRC Press))

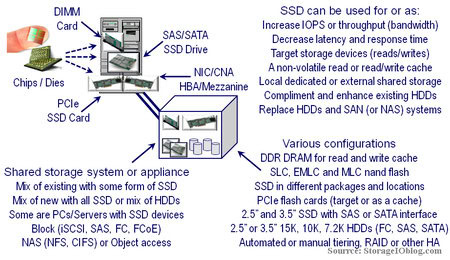

Has this been done before? There have been other vendors who have done LUN caching appliances in the past going back over a decade. Likewise there are PCIe RAID cards that support flash SSD as well as DRAM based caching. Even NetApp has had similar products and functionality with their PAM cards.

Does VFCache work with other PCIe SSD cards such as FusionIO? No, VFCache is a combination of software IO intercept and intelligent cache driver along with a PCIe SSD flash card (which, as EMC has indicated, could be supplied by different manufacturers). Thus for VFCache to be VFCache, it requires the EMC IO intercept and intelligent cache software driver.

Does VFCache work with other vendors' storage? Yes. Refer to the EMC support matrix, however the product has been architected and designed to install and coexist in a customer's existing environment, which means supporting different EMC block storage systems as well as those from other vendors. Keep in mind that a main theme of VFCache is to complement, coexist with, enhance and protect customers' investments in storage systems to improve their effectiveness and productivity as opposed to replacing them.

Does VFCache introduce a new point of vendor lockin or stickiness? Some will see or position this as a new form of vendor lockin. Others, assuming that EMC supports different vendors' storage systems downstream, offers options for different PCIe flash cards and keeps the solution affordable, will assert it is no more lockin than other solutions. In fact, by supporting third party storage systems as opposed to replacing them, smart sales people and marketeers will position VFCache as being more open and interoperable than some other PCIe flash card vendors' approaches. Keep in mind that avoiding vendor lockin is a shared responsibility (read more here).

Does VFCache work with NAS? VFCache does not work with NAS (NFS or CIFS) attached storage.

Does VFCache work with databases? Yes, VFCache is well suited for little data (e.g. database) and traditional OLTP or general business application processing that may not be covered or supported by other so-called big data focused or optimized solutions. Refer to this EMC document (and this document here) for more information.

Does VFCache only work with little data? While VFCache is well suited for little data (e.g. databases, SharePoint, file and web servers, traditional business systems), it is also able to work with other forms of unstructured data.

Does VFCache need VMware? No. While VFCache works with VMware vSphere, including a vCenter plug in, it does not need a hypervisor and is as practical in a physical machine (PM) as it is in a virtual machine (VM).

Does VFCache work with Microsoft Windows? Yes. Refer to the EMC support matrix for specific server operating systems and hypervisor version support.

Does VFCache work with other Unix platforms? Refer to the EMC support matrix for specific server operating systems and hypervisor version support.

How are reads handled with VFCache? The VFCache software (driver if you prefer) intercepts IO requests to LUNs that are being cached, performing a quick lookup to see if there is a valid cache entry in the physical VFCache PCIe card. If there is a cache hit, the IO is resolved from the closer or local PCIe card cache, making for a lower latency or faster response time IO. In the case of a cache miss, the VFCache driver simply passes the IO request on to the normal SCSI or block (e.g. iSCSI, SAS, FC, FCoE) stack for processing by the downstream storage system (or appliance). Note that when the requested data is retrieved from the storage system, the VFCache driver will, based on what its caching algorithms determine, place a copy of the data in the PCIe read cache. Thus the real power of VFCache is the software implementing the cache lookup and cache management functions to leverage the PCIe card that complements the underlying block storage systems.
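To make the read path above more concrete, here is a minimal sketch in Python. It is not EMC code: the class name, the `backend_read` callable, the per-LUN selection set and the LRU eviction policy are all assumptions for illustration, and unlike the real driver it admits every missed block into the cache rather than letting an algorithm decide.

```python
from collections import OrderedDict


class ReadCacheSketch:
    """Toy read cache in front of a slower block device (hypothetical, not EMC code)."""

    def __init__(self, backend_read, capacity_blocks, cached_luns):
        self.backend_read = backend_read      # callable(lun, block) -> bytes (assumed interface)
        self.capacity = capacity_blocks       # how many blocks the "card" can hold
        self.cached_luns = set(cached_luns)   # only these LUNs are cached
        self.entries = OrderedDict()          # (lun, block) -> data, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, lun, block):
        # LUNs that are not selected for caching bypass the cache entirely.
        if lun not in self.cached_luns:
            return self.backend_read(lun, block)

        key = (lun, block)
        if key in self.entries:
            # Cache hit: resolve locally and mark the entry as recently used.
            self.hits += 1
            self.entries.move_to_end(key)
            return self.entries[key]

        # Cache miss: pass the request down the normal block stack.
        self.misses += 1
        data = self.backend_read(lun, block)

        # Assumption: always keep a copy, evicting the least recently used block.
        self.entries[key] = data
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)
        return data


if __name__ == "__main__":
    def slow_read(lun, block):
        # Stand-in for the downstream storage system.
        return f"{lun}:{block}".encode()

    cache = ReadCacheSketch(slow_read, capacity_blocks=2, cached_luns={"lun0"})
    cache.read("lun0", 1)   # miss, fetched from the backend
    cache.read("lun0", 1)   # hit, served from the cache
    cache.read("lun1", 1)   # bypass, this LUN is not cached
    print(cache.hits, cache.misses)
```

The interesting part is not the dictionary itself but the policy around it, which is where the intelligence of a real caching driver would live.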

How are writes handled with VFCache? Unless put into a write cache mode, which is not the default, VFCache software simply passes the IO operation on to the IO stack for downstream processing by the storage system or appliance attached via a block interface (e.g. iSCSI, SAS, FC, FCoE). Note that as part of the caching algorithms, the VFCache software will make determinations of what to keep in cache based on IO activity, similar to how cache management results in better cache effectiveness in a storage system. Given EMC's long history of working with intelligent cache algorithms, one would expect some of that DNA exists or will be leveraged further in future versions of the software. Ironically this is where other vendors with long cache effectiveness histories such as IBM, HDS and NetApp among others should also be scratching their collective heads saying wow, we can or should be doing that as well (or better).

Can VFCache be used as a write cache? Yes, while its default mode is to be used as a persistent read cache to complement server and application buffers in DRAM, along with enhancing the effectiveness of downstream storage system (or appliance) caches, VFCache can also be configured as a persistent write cache.

Does VFCache include FAST automated tiering between different storage systems? The first version is only a caching tool. However, think about it a bit: where the software sits, what storage systems it can work with, and its ability to learn and understand IO paths and patterns. From that you can get an idea of where EMC could evolve it to, similar to what they have done with RecoverPoint among other tools.
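The difference between the default pass-through behavior and a write cache mode can be sketched as follows. This is only an illustration under stated assumptions: the class, the `write_cache_mode` flag, the `flush` method, and the choice to drop a cached copy on a pass-through write are hypothetical and are not taken from EMC documentation.

```python
class WritePathSketch:
    """Toy model of two write modes (hypothetical names, not EMC code)."""

    def __init__(self, backend, write_cache_mode=False):
        self.backend = backend                  # dict standing in for the storage system
        self.cache = {}                         # dict standing in for the PCIe flash card
        self.dirty = set()                      # keys written to cache but not yet destaged
        self.write_cache_mode = write_cache_mode

    def write(self, key, data):
        if self.write_cache_mode:
            # Write cache mode: land the data in flash and destage it later.
            self.cache[key] = data
            self.dirty.add(key)
        else:
            # Default mode: pass the write straight down the IO stack.
            self.backend[key] = data
            # Assumption: drop any cached copy so a later read cannot be stale.
            self.cache.pop(key, None)

    def flush(self):
        # Destage dirty entries to the downstream storage system.
        for key in sorted(self.dirty):
            self.backend[key] = self.cache[key]
        self.dirty.clear()


if __name__ == "__main__":
    storage = {}
    passthrough = WritePathSketch(storage)
    passthrough.write(("lun0", 7), b"new")
    print(storage)            # the write is already on the storage system

    storage2 = {}
    cached = WritePathSketch(storage2, write_cache_mode=True)
    cached.write(("lun0", 7), b"new")
    print(storage2)           # still empty until flush() runs
    cached.flush()
    print(storage2)
```

Write cache mode trades a faster acknowledgement for the need to track and protect dirty data, which is why it matters that the cache media is persistent.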

Evolving data access patterns and life cycles (more retention and reads)

Does VFCache mean all or nothing approach with EMC? While the complete VFCache solution comes from EMC (e.g. PCIe card and software), the solution will work with other block attached storage as well as existing EMC storage systems for investment protection.

Does VFCache support NAS based storage systems? The first release of VFCache only supports block based access, however the server that VFCache is installed in could certainly be functioning as a general purpose NAS (NFS or CIFS) server (see supported operating systems in EMC interoperability notes) in addition to being a database or other application server.

Does VFCache require that all LUNs be cached? No, you can select which LUNs are cached and which ones are not.

Does VFCache run in an active / active mode? In the first release it is active / passive; refer to EMC release notes for details.

Can VFCache be installed in multiple physical servers accessing the same shared storage system? Yes, however refer to EMC release notes on details about active / active vs. active / passive configuration rules for ensuring data integrity.

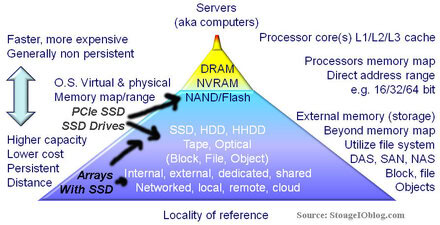

Who else is doing things like this? There are caching appliance vendors as well as others such as NetApp and IBM who have used SSD flash caching cards in their storage systems or virtualization appliances. However keep in mind that VFCache is placing the caching function closer to the application that is accessing it, thereby improving on the locality of reference (e.g. storage and IO effectiveness).

Does VFCache work with SSD drives installed in EMC or other storage systems? Check the EMC product support matrix for specific tested and certified solutions, however in general if the SSD drive is installed in a storage system that is supported as a block LUN (e.g. iSCSI, SAS, FC, FCoE), in theory it should be possible to work with VFCache. To emphasize, visit the EMC support matrix.

What type of flash is being used?

What type of NAND flash SSD memory is EMC using in the PCIe card? The first release of VFCache is leveraging enterprise class SLC (Single Level Cell) NAND flash, which has been used in other EMC products for its endurance and long duty cycle, to minimize or eliminate concerns of wear and tear while meeting read and write performance. EMC has indicated that they will also, as part of an industry trend, leverage MLC along with Enterprise MLC (EMLC) technologies on a go forward basis.

Doesn't NAND SSD flash cache wear out? While NAND flash SSD can wear out over time due to extensive write use, the VFCache approach mitigates this by being primarily a read cache, reducing the number of program / erase cycles (P/E cycles) that occur with write operations, as well as initially leveraging longer duty cycle SLC flash. EMC also has several years of experience from implementing wear leveling algorithms in their storage system controllers to increase duty cycle and reduce wear on SLC flash, which will play forward as MLC or Enterprise MLC (EMLC) techniques are leveraged. This differs from vendors who are positioning their SLC or MLC based flash PCIe SSD cards for mainly write operations, which will cause more P/E cycles to occur at a faster rate, reducing the duty or useful life of the device.
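A back of the envelope calculation shows why the volume of writes matters so much for flash life. The sketch below is a simplification: the function is hypothetical, the P/E cycle counts are commonly cited ballpark figures rather than EMC specifications, and the daily write volumes are made up purely for comparison. Only the 300GB capacity comes from the first release.

```python
def estimated_life_years(capacity_gb, pe_cycles, daily_writes_gb, write_amplification=1.0):
    """Rough endurance estimate: total writable GB divided by GB written per year."""
    total_writable_gb = capacity_gb * pe_cycles
    yearly_writes_gb = daily_writes_gb * write_amplification * 365
    return total_writable_gb / yearly_writes_gb


CARD_GB = 300              # capacity of the first VFCache release
SLC_PE_CYCLES = 100_000    # assumption: ballpark figure often quoted for SLC
MLC_PE_CYCLES = 5_000      # assumption: ballpark figure often quoted for MLC

# Hypothetical workloads: a read cache only writes to flash when it fills on a miss,
# while a card used as a write target absorbs every application write.
READ_CACHE_FILL_GB_PER_DAY = 500
WRITE_TARGET_GB_PER_DAY = 5_000

if __name__ == "__main__":
    for label, cycles in (("SLC", SLC_PE_CYCLES), ("MLC", MLC_PE_CYCLES)):
        as_read_cache = estimated_life_years(CARD_GB, cycles, READ_CACHE_FILL_GB_PER_DAY)
        as_write_target = estimated_life_years(CARD_GB, cycles, WRITE_TARGET_GB_PER_DAY)
        print(f"{label}: read cache {as_read_cache:.1f} years, write target {as_write_target:.1f} years")
```

Real devices complicate this with over provisioning, write amplification and wear leveling, however the basic relationship holds: fewer writes per day means a longer useful life.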

How much capacity does the VFCache PCIe card contain? The first release supports a 300GB card and EMC has indicated that added capacity and configuration options are in their plans.

Does this mean disks are dead? Contrary to popular industry folklore (or wish), the hard disk drive (HDD) has plenty of life left, part of which has been increased by being complemented by VFCache.

Various SSD locations, types, packaging and usage scenario options

Can VFCache work in blade servers? The VFCache software is transparent to blade, rack mount, tower or other types of servers. The hardware part of VFCache is a PCIe card, which means that the blade server or system will need to be able to accommodate a PCIe card to complement the PCIe based mezzanine IO card (e.g. iSCSI, SAS, FC, FCoE) used for accessing storage. What this means is that for blade systems or server vendors such as IBM who have a PCIe expansion module for their H series blade systems (it consumes a slot normally used by a server blade), PCIe cache cards like those being initially released by EMC could work, however check with the EMC interoperability matrix, as well as your specific blade server vendor for PCIe expansion capabilities. Given that EMC leverages Cisco UCS for their vBlocks, one would assume that those systems will also see VFCache modules. NetApp partners with Cisco using UCS in their FlexPods, so you can see where that could go as well, along with potential support from other server vendors including Dell, HP, IBM and Oracle among others.

What about benchmarks? EMC has released some technical documents that show performance improvements in Oracle environments such as this here. Hopefully we will see EMC also release results for other workloads and applications, including Microsoft Exchange Solution Reviewed Program (ESRP) along with SPC, similar to what IBM recently did with their systems among others.

How do the first EMC supplied workload simulations compare vs. other PCIe cards? This is tough to gauge as many SSD solutions, and in particular PCIe cards, are doing apples to oranges comparisons. For example, to generate a high IOPS rating for marketing purposes, most SSD solutions are stress performance tested at 512 bytes, which is 1/2 of a KByte or 1/8 of a small 4 KByte IO. Note that operating systems such as Windows are moving to a 4 KByte page allocation size to align with growing IO sizes, with databases moving from the old average of 4 KBytes to 8 KBytes and larger. What is important to consider is the average IO size and activity profile (e.g. reads vs. writes, random vs. sequential) for your applications. If your application is doing ultra small 1/2 KByte IOs, or even smaller 64 byte IOs (which should be handled by better application or file system caching in DRAM), then the smaller IO size and record setting examples will apply. However if your applications are more mainstream or larger, then those smaller IO size tests should be taken with a grain of salt. Also keep latency in mind, as many target or opportunity applications for VFCache are response time sensitive or can benefit from the improved productivity that lower latency enables.
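The IO size point above is simple arithmetic: bandwidth equals IOPS multiplied by IO size, so the same device looks very different depending on the IO size chosen for the test. The sketch below assumes a hypothetical device that is limited only by a fixed 500 MiB per second of bandwidth, which real devices are not, so treat the numbers as illustrative rather than as a model of any actual card.

```python
def iops_at_fixed_bandwidth(bandwidth_bytes_per_sec, io_size_bytes):
    """IOPS a purely bandwidth-limited device could sustain at a given IO size."""
    return bandwidth_bytes_per_sec / io_size_bytes


# Assumption: a made-up device that can move 500 MiB per second.
BANDWIDTH = 500 * 1024 * 1024

if __name__ == "__main__":
    for io_size in (512, 4096, 8192):
        iops = iops_at_fixed_bandwidth(BANDWIDTH, io_size)
        print(f"{io_size:>5} byte IOs: {iops:,.0f} IOPS")
```

A 512 byte test shows eight times the IOPS of a 4 KByte test on this idealized device without moving any more data, which is why the IO size behind a headline IOPS number needs to be stated.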

What is locality of reference? Locality of reference refers to how close data is to where it is being requested or accessed from. The closer the data is to the application requesting it, the faster the response time or the quicker the work gets done. For example in the figure below, L1/L2/L3 on board processor caches are the fastest, yet smallest, while closest to the application running on the server. At the other extreme, further down the stack, storage becomes large capacity and lower cost, however lower performing.

What does cache effectiveness vs. cache utilization mean? Cache utilization is an indicator of how much of the available cache capacity is being used, however it does not indicate whether the cache is being well used or not. For example, cache could be 100 percent used, however there could be a low hit rate. Thus cache effectiveness is a gauge of how well the available cache is being used to improve performance in terms of more work being done (IOPS or bandwidth) or lower latency and response time.

Isn't more cache better? More cache is not necessarily better; it is how the cache is being used that matters. This is a message that I would be disappointed in HDS if they were not to bring up as a point of messaging (or rebuttal) given their history of emphasizing cache effectiveness vs. size or quantity (Hu, that is a hint btw ;).

What is the performance impact of VFCache on the host server? EMC is claiming CPU consumption of 5 percent or less, which they say is several times less than the competition's worst case scenario, as well as 512MB to 1GB of DRAM on the server vs. several times that for their competitors. The difference could be expected to come from more functions being offloaded, including flash translation layer (FTL), wear leveling and other optimizations being handled by the PCIe card vs. being handled in the server's memory and using host server CPU cycles.
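To illustrate the difference between utilization and effectiveness, here is a small sketch that compares two caches that are both completely full. The function names and the latency figures (100 microseconds for a local cache hit, 5,000 microseconds for a trip to the storage system) are assumptions for illustration only.

```python
def cache_utilization(used_blocks, total_blocks):
    """Fraction of cache capacity that holds data, regardless of whether it is useful."""
    return used_blocks / total_blocks


def hit_rate(hits, misses):
    """Fraction of reads served from cache, a simple gauge of effectiveness."""
    total = hits + misses
    return hits / total if total else 0.0


def average_latency_us(rate, cache_latency_us, backend_latency_us):
    """Average read latency given a hit rate and the two service times."""
    return rate * cache_latency_us + (1.0 - rate) * backend_latency_us


if __name__ == "__main__":
    # Both hypothetical caches are 100 percent utilized.
    utilization = cache_utilization(used_blocks=1000, total_blocks=1000)

    poorly_used = hit_rate(hits=100, misses=900)
    well_used = hit_rate(hits=900, misses=100)

    for label, rate in (("poorly used", poorly_used), ("well used", well_used)):
        latency = average_latency_us(rate, cache_latency_us=100, backend_latency_us=5000)
        print(f"{label}: utilization {utilization:.0%}, hit rate {rate:.0%}, "
              f"average latency {latency:.0f} microseconds")
```

Same utilization, very different results, which is why hit rate and response time say more about a cache than how full it is.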

How does this compare to what NetApp or IBM does? NetApp, IBM and others have done caching with SSD in their storage systems, or have leveraged third party PCIe SSD cards from different vendors to be installed in servers and used as a storage target. Some vendors such as LSI have done caching on the PCIe cards (e.g. CacheCade, which in theory has a similar software caching concept to VFCache) to improve performance and effectiveness across JBOD and SAS devices.

What about stale (old or invalid) reads, how does VFCache handle or protect against those? Stale reads are handled via the VFCache management software tool or driver which leverages caching algorithms to decide what is valid or invalid data.

How much does VFCache cost? Refer to EMC announcement pricing, however EMC has indicated that they will be competitive with the market (supply and demand).

If a server shuts down or reboots, what happens to the data in the VFCache? Being that the data is in non volatile SLC NAND flash memory, information is not lost when the server reboots or loses power in the case of a shutdown, thus it is persistent. While exact details are not known as of this time, it is expected that the VFCache driver and software do some form of cache coherency and validity check to guard against stale reads or discard any other invalid cache entries.

What will EMC do with VFCache in the future and on a larger scale such as an appliance? EMC, via its own internal development and via acquisitions, has demonstrated the ability to use various clustering techniques such as RapidIO for VMAX nodes and InfiniBand for connecting Isilon nodes. Given an industry trend with several startups using PCIe flash cards installed in a server that then functions as an IO storage system, it seems likely, given EMC's history and experience with different storage systems, caching, and interconnects, that they could do something interesting. Perhaps Oracle Exadata III (Exadata I was HP, Exadata II was Sun/Oracle) could be an EMC based appliance (that is pure speculation btw)?

EMC has already shown how it can use SSD drives as a cache extension in VNX and CLARiiON systems (FAST Cache) in addition to as a target or storage tier combined with FAST for tiering. Given their history with caching algorithms, it would not be surprising to see other instantiations of the technology deployed in complementary ways.

Finally, EMC is showing that it can use NAND flash SSD in different ways and in various packaging forms to apply to diverse applications or customer environments. The companion or complementary approach EMC is currently taking contrasts with some other vendors who are taking an all or nothing, it's all SSD as disk is dead approach. Given the large installed base of disk based systems EMC as well as other vendors have in place, not to mention the investment by those customers, it makes sense to allow those customers the option of when, where and how they can leverage SSD technologies to coexist with and complement their environments. Thus with VFCache, EMC is using SSD as a cache enabler to address the decades old and growing storage IO to capacity performance gap in a force multiplier model that spreads the cost over more TBytes, PBytes or EBytes while increasing the overall benefit, in other words effectiveness and productivity.

Additional related material:

Part I: EMC VFCache respinning SSD and intelligent caching

IT and storage economics 101, supply and demand

2012 industry trends perspectives and commentary (predictions)

Speaking of speeding up business with SSD storage

New Seagate Momentus XT Hybrid drive (SSD and HDD)

Are Hard Disk Drives (HDDs) getting too big?

Unified storage systems showdown: NetApp FAS vs. EMC VNX

Industry adoption vs. industry deployment, is there a difference?

Two companies on parallel tracks moving like trains offset by time: EMC and NetApp

Data Center I/O Bottlenecks Performance Issues and Impacts

From bits to bytes: Decoding Encoding

Who is responsible for vendor lockin

EMC VPLEX: Virtual Storage Redefined or Respun?

EMC interoperability support matrix

Ok, nuff said for now, I think I see some storm clouds rolling in…

Cheers gs

Greg Schulz – Author Cloud and Virtual Data Storage Networking (CRC Press, 2011), The Green and Virtual Data Center (CRC Press, 2009), and Resilient Storage Networks (Elsevier, 2004)

twitter @storageio

All Comments, (C) and (TM) belong to their owners/posters, Other content (C) Copyright 2006-2012 StorageIO and UnlimitedIO All Rights Reserved
