Is I/O Virtualization (IOV) a server topic, a network topic, or a storage topic (See previous post)?

Like server virtualization, IOV involves servers, storage, network, operating system, and other infrastructure resource management areas and disciplines. The business and technology value proposition or benefits of converged I/O networks and I/O virtualization are similar to those for server and storage virtualization.

Additional benefits of IOV include:

- Doing more with the resources (people and technology) that already exist, or reducing costs

- Single (or pair for high availability) interconnect for networking and storage I/O

- Reduction of power, cooling, floor space, and other green efficiency benefits

- Simplified cabling and reduced complexity for server network and storage interconnects

- Boosting server performance to maximize I/O or mezzanine slots

- Reducing I/O and data center bottlenecks

- Rapid re-deployment to meet changing workload and I/O profiles of virtual servers

- Scaling I/O capacity to meet high-performance and clustered application needs

- Leveraging common cabling infrastructure and physical networking facilities

Before going further, let's take a step backwards for a few moments.

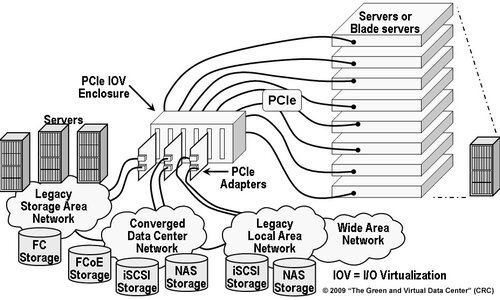

To say that I/O and networking demands and requirements are increasing is an understatement. The amount of data being generated, copied, and retained for longer periods of time is elevating the importance of the role of data storage and infrastructure resource management (IRM). Networking and input/output (I/O) connectivity technologies (Figure 1) tie together facilities, servers, storage, tools for measurement and management, and best practices on a local and wide area basis to enable an environmentally and economically friendly data center.

TIERED ACCESS FOR SERVERS AND STORAGE

There is an old saying that the best I/O, whether local or remote, is an I/O that does not have to occur. I/O is an essential activity for computers of all shapes, sizes, and focus to read and write data in and out of memory (including external storage) and to communicate with other computers and networking devices. This includes communicating on a local and wide area basis for access to or over Internet, cloud, XaaS, or managed services providers such as those shown in Figure 1.

Figure 1 The Big Picture: Data Center I/O and Networking

The challenge of I/O is that it requires some form of connectivity (logical and physical) along with associated software, and it introduces time delays while waiting for reads and writes to occur. I/O operations that are closest to the CPU or main processor should be the fastest and occur most frequently for access to main memory using internal local CPU to memory interconnects. In other words, fast servers or processors need fast I/O in terms of low latency, I/O operations, and bandwidth capabilities.

Figure 2 Tiered I/O and Networking Access

Moving out and away from the main processor, I/O remains fairly fast with distance but is more flexible and cost effective. An example is the PCIe bus and I/O interconnect shown in Figure 2, which is slower than processor-to-memory interconnects but is still able to support attachment of various device adapters with very good performance in a cost effective manner.

Farther from the main CPU or processor, various networking and I/O adapters can attach to PCIe, PCIx, or PCI interconnects for backward compatibility to support various distances, speeds, types of devices, and cost factors.

In general, the faster a processor or server is, the more prone to a performance impact it will be when it has to wait for slower I/O operations.

Consequently, faster servers need better-performing I/O connectivity and networks. Better performing means lower latency, more IOPS, and improved bandwidth to meet application profiles and types of operations.
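To put rough numbers on why faster processors feel slow I/O more, here is a minimal sketch that estimates how many processor cycles go by while a single I/O completes. The clock rates and per-tier latencies are illustrative assumptions for this example, not measurements, and `cycles_waiting` is a hypothetical helper name.

```python
def cycles_waiting(clock_ghz: float, io_latency_us: float) -> int:
    """Processor cycles that elapse during one I/O of the given latency."""
    cycles_per_us = clock_ghz * 1_000  # 1 GHz is 1,000 cycles per microsecond
    return round(cycles_per_us * io_latency_us)


# Hypothetical latencies in microseconds for each access tier (illustrative only).
tiers_us = {
    "main memory": 0.1,
    "PCIe attached device": 10.0,
    "networked storage": 1_000.0,
}

for clock_ghz in (1.0, 3.0):
    for tier, latency_us in tiers_us.items():
        waited = cycles_waiting(clock_ghz, latency_us)
        print(f"{clock_ghz} GHz, {tier}: {waited:,} cycles spent waiting")
```

For the same I/O latency, the faster processor gives up three times as many cycles, which is the point of the paragraphs above.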

Peripheral Component Interconnect (PCI)

Having established that computers need to perform some form of I/O to various devices, at the heart of many I/O and networking connectivity solutions is the Peripheral Component Interconnect (PCI) interface. PCI is an industry standard that specifies the chipsets used to communicate between CPUs and memory and the outside world of I/O and networking device peripherals.

Figure 3 shows an example of multiple servers or blades, each with dedicated Fibre Channel (FC) and Ethernet adapters (there could be two or more for redundancy). Simply put, the more servers and devices there are to attach to, the more adapters, cabling, and complexity are involved, particularly for blade servers and dense rack mount systems.

Figure 3 Dedicated PCI adapters for I/O and networking devices

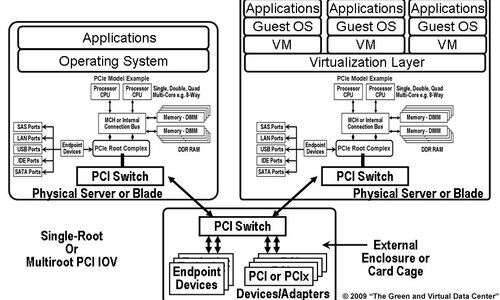

Figure 4 shows an example of a PCI implementation including various components such as bridges, adapter slots, and adapter types. PCIe leverages multiple serial unidirectional point to point links, known as lanes, in contrast to traditional PCI, which used a parallel bus design.

Figure 4 PCI IOV Single Root Configuration Example

In traditional PCI, bus width varied from 32 to 64 bits; in PCIe, the number of lanes combined with PCIe version and signaling rate determines performance. PCIe interfaces can have 1, 2, 4, 8, 16, or 32 lanes for data movement, depending on card or adapter format and form factor. For example, PCI and PCIx performance can be up to 528 MB per second with a 64 bit, 66 MHz signaling rate, and PCIe is capable of over 4 GB per second (i.e., 32 Gbit per second) in each direction using 16 lanes for high-end servers.
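The 528 MB and 4 GB figures above can be reproduced with some simple arithmetic. This is a sketch, not a sizing tool: the function names are made up for illustration, and the PCIe defaults assume first-generation signaling (2.5 GT/s per lane with 8b/10b encoding), which the post does not state. Later PCIe generations signal faster and move more data per lane.

```python
def parallel_pci_mb_per_s(bus_width_bits: int, clock_mhz: float) -> float:
    """Peak MB/s of a parallel PCI or PCIx bus: bytes per transfer times clock rate."""
    return (bus_width_bits / 8) * clock_mhz


def pcie_mb_per_s(lanes: int, gt_per_s: float = 2.5,
                  payload_bits: int = 8, line_bits: int = 10) -> float:
    """Peak MB/s in each direction for a PCIe link.

    Defaults are assumptions: first-generation PCIe at 2.5 GT/s per lane,
    where 8b/10b encoding carries 8 payload bits in every 10 bits on the wire.
    """
    usable_gbit_per_lane = gt_per_s * payload_bits / line_bits
    return lanes * usable_gbit_per_lane * 1000 / 8  # Gbit/s to MB/s


print(parallel_pci_mb_per_s(64, 66))  # 64 bit, 66 MHz parallel bus
print(pcie_mb_per_s(16))              # 16 lanes, per direction
```

The lane count is the main knob: under the same assumptions, a 4 lane link moves one quarter of what a 16 lane link does.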

The importance of PCIe and its predecessors is a shift from multiple vendors’ different proprietary interconnects for attaching peripherals to servers. For the most part, vendors have shifted to supporting PCIe or earlier generations of PCI in some form, ranging from native internal on laptops and workstations to I/O, networking, and peripheral slots on larger servers.

The most current version of PCI, as defined by the PCI Special Interest Group (PCI-SIG), is PCI Express (PCIe). Backwards compatibility exists by bridging previous generations, including PCIx and PCI, off a native PCIe bus or, in the past, bridging a PCIe bus to a PCIx native implementation. Beyond speed and bus width differences for the various generations and implementations, PCI adapters also are available in several form factors and applications.

Traditional PCI was generally limited to a main processor or was internal to a single computer, but current generations of PCI Express (PCIe) include support for PCI Special Interest Group (PCI-SIG) I/O virtualization (IOV), enabling the PCI bus to be extended to distances of a few feet. Compared to local area networking, storage interconnects, and other I/O connectivity technologies, a few feet is a very short distance, but compared to the previous limit of a few inches, extended PCIe provides the ability for improved sharing of I/O and networking interconnects.

I/O VIRTUALIZATION (IOV)

On a traditional physical server, the operating system sees one or more instances of Fibre Channel and Ethernet adapters even if only a single physical adapter, such as an InfiniBand HCA, is installed in a PCI or PCIe slot. In the case of a virtualized server (for example, Microsoft Hyper-V or VMware ESX/vSphere), the hypervisor will be able to see a single physical adapter, or multiple adapters for redundancy and performance, and share it with guest operating systems. The guest systems see what appears to be a standard SAS, FC, or Ethernet adapter or NIC using standard plug-and-play drivers.

Virtual HBAs or virtual network interface cards (NICs) and switches are, as their names imply, virtual representations of a physical HBA or NIC, similar to how a virtual machine emulates a physical machine with a virtual server. With a virtual HBA or NIC, physical adapter resources are carved up and allocated much as they are for virtual machines, but instead of hosting a guest operating system like Windows, UNIX, or Linux, a SAS or FC HBA, FCoE converged network adapter (CNA), or Ethernet NIC is presented.

In addition to virtual or software-based NICs, adapters, and switches found in server virtualization implementations, virtual LAN (VLAN), virtual SAN (VSAN), and virtual private network (VPN) are tools for providing abstraction and isolation or segmentation of physical resources. Using emulation and abstraction capabilities, various segments or sub networks can be physically connected yet logically isolated for management, performance, and security purposes. Some form of routing or gateway functionality enables various network segments or virtual networks to communicate with each other when appropriate security is met.

PCI-SIG IOV

PCI SIG IOV consists of a PCIe bridge attached to a PCI root complex along with an attachment to a separate PCI enclosure (Figure 5). Other components and facilities include address translation service (ATS), single-root IOV (SR IOV), and multiroot IOV (MR IOV). ATS enables performance to be optimized between an I/O device and a server's I/O memory management. SR IOV enables multiple guest operating systems to access a single I/O device simultaneously, without having to rely on a hypervisor for a virtual HBA or NIC.

Figure 5 PCI SIG IOV

The benefit is that physical adapter cards, located in a physically separate enclosure, can be shared within a single physical server without having to incur any potential I/O overhead via virtualization software infrastructure. MR IOV is the next step, enabling a PCIe or SR IOV device to be accessed through a shared PCIe fabric across different physically separated servers and PCIe adapter enclosures. The benefit is increased sharing of physical adapters across multiple servers and operating systems, not to mention simplified cabling, reduced complexity, and improved resource utilization.
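As a practical aside, here is a minimal sketch of how you might check which adapters in a server advertise SR IOV. It assumes a Linux host, where the kernel exposes `sriov_totalvfs` and `sriov_numvfs` files under `/sys/bus/pci/devices/` for SR IOV capable devices. Linux is my assumption here (the post does not name an operating system for this), and `sriov_devices` is a hypothetical helper name.

```python
from pathlib import Path


def sriov_devices(sysfs_root: str = "/sys/bus/pci/devices") -> dict[str, tuple[int, int]]:
    """Map PCI address to (enabled VFs, maximum VFs) for SR IOV capable devices.

    Assumes the Linux sysfs layout; returns an empty dict if the path is absent.
    """
    found: dict[str, tuple[int, int]] = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return found
    for dev in sorted(root.iterdir()):
        total = dev / "sriov_totalvfs"   # maximum virtual functions the device supports
        enabled = dev / "sriov_numvfs"   # virtual functions currently enabled
        if total.is_file() and enabled.is_file():
            found[dev.name] = (int(enabled.read_text()), int(total.read_text()))
    return found
```

Calling `sriov_devices()` with no arguments on a Linux server returns one entry per SR IOV capable adapter. An empty result means no device advertises the capability, or the host is not Linux.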

Figure 6 PCI SIG MR IOV

Figure 6 shows an example of a PCIe switched environment, where two physically separate servers or blade servers attach to an external PCIe enclosure or card cage for attachment to PCIe, PCIx, or PCI devices. Instead of the adapter cards physically plugging into each server, a high-performance, short-distance cable connects the server's PCI root complex via a PCIe bridge port to a PCIe bridge port in the enclosure device.

In Figure 6, either SR IOV or MR IOV can take place, depending on specific PCIe firmware, server hardware, operating system, devices, and associated drivers and management software. For an SR IOV example, each server has access to some number of dedicated adapters in the external card cage, for example, InfiniBand, Fibre Channel, Ethernet, or Fibre Channel over Ethernet (FCoE) and converged networking adapters (CNA) also known as HBAs. SR IOV implementations do not allow different physical servers to share adapter cards. MR IOV builds on SR IOV by enabling multiple physical servers to access and share PCI devices such as HBAs and NICs safely with transparency.
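The sharing rule that separates SR IOV from MR IOV can be captured in a few lines. This is a toy model only, with a hypothetical `SharedAdapter` class and made-up server and guest names. It says nothing about how real firmware or drivers enforce the rule; it just encodes the policy described above.

```python
from dataclasses import dataclass, field


@dataclass
class SharedAdapter:
    name: str
    mode: str  # "SR" (single root) or "MR" (multiroot)
    users: set[tuple[str, str]] = field(default_factory=set)  # (server, guest OS) pairs

    def attach(self, server: str, guest: str) -> bool:
        """Attach a guest, refusing if that would break the mode's sharing rule."""
        servers_in_use = {s for s, _ in self.users}
        if self.mode == "SR" and servers_in_use and server not in servers_in_use:
            return False  # SR IOV: sharing stays within one physical server
        self.users.add((server, guest))
        return True


sr = SharedAdapter("fc-hba-0", "SR")
print(sr.attach("server-a", "guest-1"))  # True: first guest on server-a
print(sr.attach("server-a", "guest-2"))  # True: second guest, same server
print(sr.attach("server-b", "guest-1"))  # False: different physical server

mr = SharedAdapter("nic-0", "MR")
print(mr.attach("server-a", "guest-1"))  # True
print(mr.attach("server-b", "guest-1"))  # True: MR IOV spans servers
```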

The primary benefit of PCI IOV is to improve utilization of PCI devices, including adapters or mezzanine cards, as well as to enable performance and availability for slot-constrained and physical footprint or form factor-challenged servers. Caveats of PCI IOV are distance limitations and the need for hardware, firmware, operating system, and management software support to enable safe and transparent sharing of PCI devices. Examples of PCIe IOV vendors include Aprius, NextIO and Virtensys among others.

InfiniBand IOV

InfiniBand based IOV solutions are an alternative to Ethernet-based solutions. Essentially, InfiniBand approaches are similar, if not identical, to converged Ethernet approaches including FCoE, with the difference being InfiniBand as the network transport. InfiniBand HCAs with special firmware are installed into servers that then see a Fibre Channel HBA and Ethernet NIC from a single physical adapter. The InfiniBand HCA also attaches to a switch or director that in turn attaches to Fibre Channel SAN or Ethernet LAN networks.

The value of InfiniBand converged networks is that they exist today, and they can be used for consolidation as well as to boost performance and availability. InfiniBand IOV also provides an alternative for those who do not choose to deploy Ethernet.

From a power, cooling, floor-space or footprint standpoint, converged networks can be used for consolidation to reduce the total number of adapters and the associated power and cooling. In addition to removing unneeded adapters without loss of functionality, converged networks also free up or allow a reduction in the amount of cabling, which can improve airflow for cooling, resulting in additional energy efficiency. An example of a vendor using InfiniBand as a platform for I/O virtualization is Xsigo.
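The consolidation math is simple enough to sketch. The server count and adapters per server below are hypothetical values chosen for illustration, and the example assumes one cable per adapter; plug in your own numbers.

```python
def adapter_count(servers: int, adapters_per_type: int, adapter_types: int) -> int:
    """Total adapters across all servers for a given connectivity design."""
    return servers * adapters_per_type * adapter_types


servers = 16  # hypothetical rack of blade servers

# Dedicated design: a redundant pair each of Fibre Channel and Ethernet adapters.
dedicated = adapter_count(servers, adapters_per_type=2, adapter_types=2)

# Converged design: one redundant pair of adapters carrying both kinds of traffic.
converged = adapter_count(servers, adapters_per_type=2, adapter_types=1)

saved = dedicated - converged
print(f"dedicated: {dedicated} adapters, converged: {converged} adapters")
print(f"adapters (and cables, at one cable each) removed: {saved}")
```

Each adapter removed also takes its share of power draw and cooling load with it, which is where the energy efficiency benefit comes from.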

General takeaway points include the following:

- Minimize the impact of I/O delays to applications, servers, storage, and networks

- Do more with what you have, including improving utilization and performance

- Consider latency, effective bandwidth, and availability in addition to cost

- Apply the appropriate type and tiered I/O and networking to the task at hand

- I/O operations and connectivity are being virtualized to simplify management

- Convergence of networking transports and protocols continues to evolve

- PCIe IOV is complementary to converged networking including FCoE

Moving forward, a revolutionary new technology may emerge that finally eliminates the need for I/O operations. However, until that time, or at least for the foreseeable future, several things can be done to minimize the impacts of I/O for local and remote networking as well as to simplify connectivity.

PCIe Fundamentals Server Storage I/O Network Essentials

Learn more about IOV, converged networks, LAN, SAN, MAN and WAN related topics in Chapter 9 (Networking with your servers and storage) of The Green and Virtual Data Center (CRC) as well as in Resilient Storage Networks: Designing Flexible Scalable Data Infrastructures (Elsevier).

Ok, nuff said.

Cheers gs

Greg Schulz – Author Cloud and Virtual Data Storage Networking (CRC Press), The Green and Virtual Data Center (CRC Press) and Resilient Storage Networks (Elsevier)

twitter @storageio

All Comments, (C) and (TM) belong to their owners/posters, Other content (C) Copyright 2006-2026 Server StorageIO and UnlimitedIO LLC All Rights Reserved